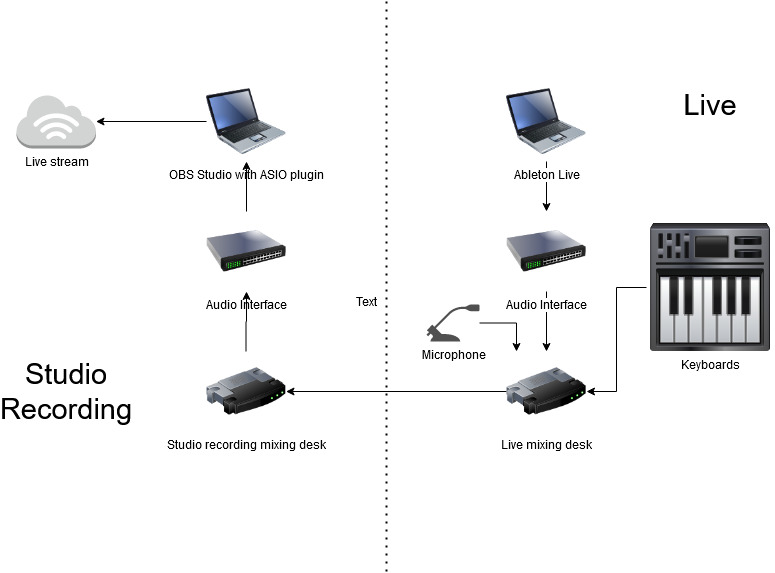

After playing live for more than a year and a half, I had been eyeing this baby: the Akai Force. My live setup when playing solo, or now as a duo with a bass player, centers around Ableton Live on a laptop with a Focusrite audio interface. In previous articles you can read how I use Ableton Live on stage. It is great to be able to play songs live just like they were recorded in the studio.

I am now also playing more acoustic (Stripped) versions of my songs without a laptop, partly because it still feels a bit like cheating to have a computer play along during a live show. I know it is very common to play like this these days, and my audience has never questioned it, but it still bothers me that people might think I am checking my email during a show. Of course, to avoid constantly working on the laptop I control it with a Novation Launchpad, but I do have to check the laptop screen once in a while.

Enter the Akai Force. Not only an MPC (Midi/Music Production Center/Controller), but also a standalone live instrument. It promises to read an Ableton Live set and convert it to an MPC live set. This way it could potentially do away with the laptop, the audio interface and the Launchpad. It is all in one, and it looks like a musical instrument. These are my first impressions of the Akai Force.

Unboxing it reveals a heavy and sturdy device. It is larger than an Ableton Push, but follows the same idea. It has a nice touch screen, and strangely it wants you to either start a new project or open an existing one before it lets you access other functions, like browsing or configuring the device. It is possible to change this behavior in the preferences.

The device starts up in Standalone mode, but can be put into Computer mode. In this mode it gives the computer access to connected storage, but strangely not to the internal storage. In Computer mode it also turns into a dumb audio interface. The best thing to do first is to mount a 2.5-inch SSD inside. There is a lid with room behind it for an SSD, and this was a pleasant surprise for me, because I thought I would have to replace the internal storage. Internal storage is only 16 GB, of which 6 GB is available. After initializing, formatting (exFAT) and naming the new drive, it now shows up as Force SSD. Nice.

After copying my Ableton Live set to the Force, the real work begins, and with it came my first really unpleasant surprise. My live set runs itself, if I let it, by using Follow Actions on clips and scenes. None of this works on the Force. Fortunately this can be simulated by splitting the songs where I used Follow Actions into separate live sets and creating an Arrangement for each. The Arrangements import perfectly into the Force. It is also still possible to leave the Arrangement and trigger clips and scenes manually. Phew!

Another unpleasant surprise: only 8 audio tracks in a live set. Yes, I must admit I was not well prepared for this. It was quite hard to find this kind of detail; I only found it in forum discussions. It means some work on my side to review the live sets and mix down parts of them before importing. I’m still working on that.
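To see in advance which live sets need a mixdown, a small script can count the audio tracks in a set before importing it. This is only a rough sketch under a few assumptions: that an `.als` file is gzip-compressed XML in which every audio track appears as an `AudioTrack` element, and that the limit really is the 8 tracks mentioned in the forums. The function names and the constant are made up for this example.

```python
import gzip
import xml.etree.ElementTree as ET

# Assumption: the 8 audio track limit reported in forum discussions.
FORCE_AUDIO_TRACK_LIMIT = 8


def count_audio_tracks(als_bytes: bytes) -> int:
    """Count the audio tracks in the raw bytes of an Ableton Live set.

    Assumes the .als file is gzip-compressed XML and that each audio
    track is stored as an <AudioTrack> element.
    """
    xml_bytes = gzip.decompress(als_bytes)
    root = ET.fromstring(xml_bytes)
    return sum(1 for _ in root.iter("AudioTrack"))


def fits_on_force(als_bytes: bytes, limit: int = FORCE_AUDIO_TRACK_LIMIT) -> bool:
    """Return True if the set stays within the assumed audio track limit."""
    return count_audio_tracks(als_bytes) <= limit
```

To use it, read a live set in binary mode, for example with `open("my_set.als", "rb").read()` (the file name is just a placeholder), and pass the bytes to `fits_on_force`. It only counts tracks; it does not say anything about Follow Actions or other features that may not survive the import.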

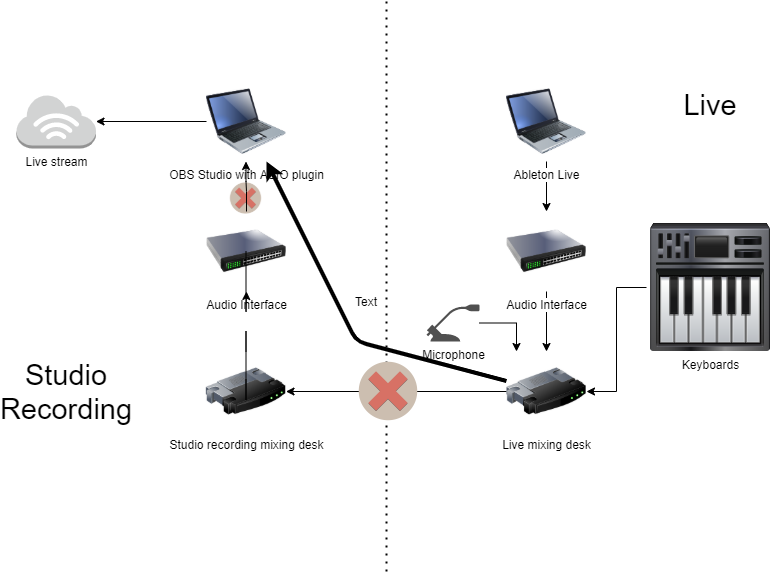

Then a nice surprise: the internal mixer can send to two pairs of outputs, 1/2 and 3/4. This works quite nicely for my setup, with one pair for my in-ear monitoring mix with click and the other for the live mix without click. The number of inputs, however, seems limited to only two. That is enough for me playing solo, but not enough to also accommodate my bass player, so I still need a separate live mixer when doing a show.

The next steps for me are to explore the effects section for playing live, and to try the Force as a musical instrument, both live and for production. Stay tuned for more about this new addition to the studio. If you have experience with the Force, please comment or send me a message! Let me know what other questions you have about the Force!